Deploying a Hadoop 3 Cluster (Ubuntu x64, Cloud)

Software versions

- Cluster Node OS: Ubuntu 20.04 amd64
- PC OS: Windows 10 amd64
- Hadoop: 3.3.2
- JDK: 1.8.0

Preparation

Purchasing servers

Once the servers are created, you get the following information:

| ID | Hostname | Private IP | Public IP |
|---|---|---|---|
| 1a150e1f-424f-482f-9553-df981cd61302 | zzj-2019211379-0003 | 192.168.0.203 | 120.46.150.239 |
| 15b820fa-30c5-4a7b-b945-fcba50f35432 | zzj-2019211379-0004 | 192.168.0.101 | 120.46.142.190 |
| 4ad4d017-4ebd-4998-8c1f-a1e3f545073a | zzj-2019211379-0001 | 192.168.0.93 | 119.3.181.21 |
| 0e96a8cc-0c48-4979-b364-4c2322c2f082 | zzj-2019211379-0002 | 192.168.0.230 | 120.46.144.113 |

[PC] SSH connection configuration

c:\Users\i\.ssh\config:

Host zzj-2019211379-0003

HostName 120.46.150.239

RemoteForward 7890 localhost:7890

User root

Host zzj-2019211379-0004

HostName 120.46.142.190

RemoteForward 7890 localhost:7890

User root

Host zzj-2019211379-0001

HostName 119.3.181.21

RemoteForward 7890 localhost:7890

User root

Host zzj-2019211379-0002

HostName 120.46.144.113

RemoteForward 7890 localhost:7890

User root

Optionally, append the following two settings to keep SSH sessions from timing out.

ServerAliveInterval 20

ServerAliveCountMax 999

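[Nodes] Disabling cloud-init

cloud-init rewrites /etc/hosts and binds the hostname to 127.0.0.1, which defeats the IP bindings we add by hand. Disable it:

sudo touch /etc/cloud/cloud-init.disabled

**Note:** if you later set the hostname with hostnamectl set-hostname xxx, the binding is written once more and causes many bugs further on. You then have to delete the corresponding entry by hand.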
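One way to spot such a loopback binding is to scan the hosts file for loopback lines that mention the node's hostname. The sketch below is only an illustration: the function name loopback_bindings is made up here, and it assumes the usual whitespace-separated /etc/hosts layout.

```shell
#!/bin/sh
# Hypothetical helper: print every line of a hosts file that binds the
# given hostname to a loopback address (127.x.x.x or ::1).
loopback_bindings() {
    hosts_file=$1
    name=$2
    awk -v name="$name" '
        $1 ~ /^#/ { next }                        # skip comment lines
        $1 ~ /^127\./ || $1 == "::1" {
            for (i = 2; i <= NF; i++)
                if ($i == name) { print; next }   # report the line once
        }
    ' "$hosts_file"
}

# Example: loopback_bindings /etc/hosts "$(hostname)"
```

Any line it prints is a candidate for manual removal; an empty result suggests the hostname is not bound to loopback.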

[Nodes] pdsh

Run on all nodes:

apt install pdsh -y

printf "\nexport PDSH_RCMD_TYPE=ssh\n" >> ~/.bashrc

[Nodes] ssh key

Run on all nodes:

ssh-keygen -t rsa

Then, still on every node:

cat ~/.ssh/id_rsa.pub

Collect the output into a single file:

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQDXX...dmMGqa3WCuJLVm/0= root@zzj-2019211379-0001

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCrn...zPcrRqTcrjKd0Xnc= root@zzj-2019211379-0002

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQDGV...HVWHBGZqbH6XW99M= root@zzj-2019211379-0003

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCq+...eQq+9U4yTTNWa++k= root@zzj-2019211379-0004

Write all of them into ~/.ssh/authorized_keys:

cat << EOT >> ~/.ssh/authorized_keys

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCdy0FyS3C7tky69Cbjwm8OtwpIcp46vzfOj1RYeN9M21/xHbPe5Db4M9oRyck6DsfreHYBRS50iyADyoG7GsoqNzZAfpNBuRaRMc2c9KlimzVCooFwSj1pQM8laGWE42/fGpPjrgXYlZkJlX0Dgd/RIHtDkvOMQ8C/LJ8eRet+DQ9vFFQX5Fa3T5GT426GrLxFTaZXwt28P5eFLbUMm9/OoikwcmN6x1is7Ra3932Vk+DpL8D14npIDWT9M80HxVHlDTUkaZcYRhGdg2KfkRi9KurvvgZiyH79dzE/D+/EesfEkNuxALXwM00nsZnMI20zRZaPhdOR0/aNAz4li8BV PLUVETO

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQDXXUcioVbCkK+M8eDFpgiFOn54U7+Q4Ndl6TdXcTCdk9gJfzBVLo3B/1OavNDBj+FZZETkD+D78yvE+r+vC3KtGI7IHGN06InIE44KGobS1W/j/akEwQPlj+5skIync1+BAK2+sQFO1BB9bxHakyaSeURYsiwsCTcmSypVDwB9wezFQrs9cQKNpx2R0ctYcvnsOOWQCMnxhC1Hf93TrRFJ50vZCaMYXZH0Mk0xAMm54l6OnA6JGyv06g3WQbW/g3LI5Q7BZA36Tf6vEZD5b4Mc40U1wGcvPW1OrneyQDnRBfbZ23KwUaPxUkuiGs0bR7E7jsq6xQsM6vGvayFadXnE40BI6d4A+ya2mNhDWk7IJ4wIgkB+nbpyBcMPlKG7HEawMqqtIZHguyv5RR75JnR1Q4ZLmFF/w+YrC9XrEHQu2Lm3HugHHOfzAo9tgAUDlw5w9TLy7Q06auEJsLtRSNPdR4tHdZV0hbxCJ3Vdfl99rnCXeE+dmMGqa3WCuJLVm/0= root@zzj-2019211379-0001

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCrnvLs4B5pTzOoWjoUMKQ8AnIcPhmdpxFyLXbj0WOKVDUIxWbyEGAcMhtCxjdbK29jmPhdFwMl7sgkDeVP+RZiAJMEqPsOkIIPdyOLzmnZKTtcOL74nT2havBfXxLMf+BCG7h1otLQhI62GTFbkO4yaDBnoWSnzXt176PtQlxdxMv2NN2lO16Q10RLQ6v8ST4jkyz55vJMLGsRi1Wuhx5nwOKo/HRcC5xN01RVKjn9+5xaQx+QRSsrrI2I1MEmduwNYqLKyytVsxajx8HRDZEDqjsK54rHDkI2pR1rqsNNwNsPdmMbPkpIGf/zx4y7WSIvKUoSKLWUAFKIi+BXr0fnk6NNUB6hHlhLKVZ7pMj1iohh+HvfrIDNJy/OtgeP4UWY9IOsK1xqLlBmjzSlKSfJz0Dj6ofu9+yr5vFUF7MSx8jYH8oN1/D8OKkikwc6J4fdeSAHG7dJol8LCLFcuM9OS2Y1BDMtt+FhDzik9seqrvqpoBwzPcrRqTcrjKd0Xnc= root@zzj-2019211379-0002

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQDGVRFajhsgST7FK9Tu7A2fb3COzUx8W5J1CZH+h3PLJFRJg2bqSg99T01HEQQx2Agh9VpQRspYH9ahaJMdUIE9rZp9BimirazG0x3OGn1tvfEmXPHgPW5rF6BLJzDRA/U2PVYO1J6GhOXGH4MTkGcYquSGiliJf4oYgO7BUqX41FbWpHYAy624UE3sHNfUBZVlgOf8OXgVuK0U0qw9RO0TBOAZ17niOqHRJZ6pZBPwcvpy20CirwaWUB4W0etfJv4RSDVCFRoSuyg6J7TRI5QaKsk6Zro/rgS9tHsRjGYkWINhLH54yculloDt4A0tUzIaR9xYt9mEoXyDUklh20DHgAx99pqkQt9CNg0wEjo2LcrRzKFxDuwtB89AnD+5NmHF0C532coJyazweJd9hgV/iw/Ovpb6X03ImRDThjBaTWrR3x1B+qTyxjm3i0RfIsnCVuRCvTF3EiU9H19rpxoZY+4SYrI4NKkqjqxlxCfP2z1tJXtHVWHBGZqbH6XW99M= root@zzj-2019211379-0003

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCq+E502PBhYIQPeTLeNt9Fy5yqKwqnG99hZNbmUaqoXy6I2UJi/7XpTAM9pEchVAS6+dr0VuZsqqWs8W5Q2rBV7f6U56xEDOY08hIm0fiwWON8wX2KkpQoM2ziIwywHj09MnUp4oufzlGGL7ziNWxAZYi0FbU8+K6IKnXH/gYQ3dkmT1+Uj9lSRXoQPCbB2KJz6jYlK8dVwQuxSuycJn8kiVGpjo9CU9hNfIf8xg4ETHuOlFlNLKGDpMdcUqzrqFnr/8icY6H2Zu7TOQEFklhdbQN1WirzmwwpO0q0pSemmRl01rjBD+tcyhsvqsQzTZEspzsVAE+UInWxf8Gx1/xae9/EU4IqbYA+uS/dsnnx+rw2CsDRHEP+0x2mRLVhFSLIPgkNAXXe3sLNrdLpiEhrd+247/5OrWeza0fI7uKPYSfrZHVgTWHqMacQR1FqPDGzP7OjZYiUjEFAIGPQz8Zm03klM0glWFdB4Sf0mOwnWUhOT8reQq+9U4yTTNWa++k= root@zzj-2019211379-0004

EOT
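Because the heredoc appends with >>, running it twice leaves duplicate entries in authorized_keys. A minimal sketch of an idempotent alternative follows; add_key_once is a hypothetical helper name, not part of OpenSSH.

```shell
#!/bin/sh
# Hypothetical helper: append a public-key line to an authorized_keys file
# only if that exact line is not already present.
add_key_once() {
    key_line=$1
    auth_file=$2
    mkdir -p "$(dirname "$auth_file")"
    touch "$auth_file"
    chmod 600 "$auth_file"
    # -q quiet, -x whole-line match, -F fixed string (no regex)
    grep -qxF -- "$key_line" "$auth_file" || printf '%s\n' "$key_line" >> "$auth_file"
}

# Example: add_key_once "$(cat ~/.ssh/id_rsa.pub)" ~/.ssh/authorized_keys
```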

[Master] Hosts

cat /etc/hosts

127.0.0.1 localhost

# The following lines are desirable for IPv6 capable hosts

::1 localhost ip6-localhost ip6-loopback

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

127.0.1.1 localhost.vm localhost

192.168.0.203 zzj-2019211379-0003

192.168.0.101 zzj-2019211379-0004

192.168.0.93 zzj-2019211379-0001

192.168.0.230 zzj-2019211379-0002

Verify:

ssh zzj-2019211379-0003

[Master] dnsmasq

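Normally /etc/hosts has to be maintained on every node, which scales poorly. Installing a name resolution service on the Master solves this.

Linux handles a DNS lookup in this order:

test.com -> /etc/hosts -> /etc/resolv.conf -> dnsmasq

dnsmasq in turn handles it in this order:

dnsmasq -> hosts.dnsmasq -> /etc/dnsmasq.conf -> resolv.dnsmasq.conf

Install it (do this before disabling systemd-resolved; otherwise the installation fails):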

apt install dnsmasq -y

Disable Ubuntu's built-in systemd-resolved:

sudo systemctl stop systemd-resolved

sudo systemctl disable systemd-resolved

sudo systemctl mask systemd-resolved

Then remove the symlink:

ls -lh /etc/resolv.conf

lrwxrwxrwx 1 root root 39 Aug 8 15:52 /etc/resolv.conf -> ../run/systemd/resolve/stub-resolv.conf

sudo unlink /etc/resolv.conf

To restore it later:

sudo systemctl unmask systemd-resolved
sudo systemctl enable systemd-resolved
sudo systemctl start systemd-resolved

Start dnsmasq:

systemctl start dnsmasq

Check its status:

systemctl status dnsmasq

Edit the main configuration file with vi /etc/dnsmasq.conf and add at the top:

no-resolv

# Google's nameservers, for example

server=8.8.8.8

server=8.8.4.4

Prefer the DNS servers supplied by your cloud provider here. You can look them up before making the change with the systemd-resolve --status command.
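If the upstream servers differ per environment, the lines for /etc/dnsmasq.conf can be generated rather than typed. This is a small sketch under that assumption; render_upstreams is a hypothetical name, and the function only prints text for you to paste at the top of the file.

```shell
#!/bin/sh
# Hypothetical helper: print the dnsmasq lines for a list of upstream resolvers.
render_upstreams() {
    echo "no-resolv"            # do not take upstream servers from /etc/resolv.conf
    for ip in "$@"; do
        echo "server=$ip"       # one upstream resolver per line
    done
}

# Example (substitute the resolvers reported for your own network):
# render_upstreams 8.8.8.8 8.8.4.4
```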

Restart for the change to take effect:

systemctl restart dnsmasq

Test on the Master itself:

nslookup z.cn localhost

Server: localhost

Address: ::1#53

Non-authoritative answer:

Name: z.cn

Address: 54.222.60.252

dig A z.cn

; <<>> DiG 9.16.1-Ubuntu <<>> A z.cn

;; global options: +cmd

;; connection timed out; no servers could be reached

root@zzj-2019211379-0001:~# vi /etc/resolv.conf

root@zzj-2019211379-0001:~# dig A z.cn

; <<>> DiG 9.16.1-Ubuntu <<>> A z.cn

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 49596

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 512

;; QUESTION SECTION:

;z.cn. IN A

;; ANSWER SECTION:

z.cn. 900 IN A 54.222.60.252

;; Query time: 44 msec

;; SERVER: 8.8.8.8#53(8.8.8.8)

;; WHEN: Sun Mar 20 20:41:06 CST 2022

;; MSG SIZE rcvd: 49

Test from Slave1 (192.168.0.93 is the Master's IP):

nslookup z.cn 192.168.0.93

Server: 192.168.0.93

Address: 192.168.0.93#53

Non-authoritative answer:

Name: z.cn

Address: 54.222.60.252

[Slaves] nameserver

Point the Slaves at the new DNS server:

printf "nameserver 192.168.0.93\n" >> /etc/resolvconf/resolv.conf.d/head

Apply the change:

sudo resolvconf -u

Check:

nslookup z.cn

Server: 192.168.0.93

Address: 192.168.0.93#53

Non-authoritative answer:

Name: z.cn

Address: 54.222.60.252

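Seeing 192.168.0.93#53, the Master's nameserver address, confirms that it works.

Earlier we added DNS records for every node on the Master, so we can now cross-check that each node resolves the others.

Run on all nodes: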

ping -w 1 zzj-2019211379-0003

ping -w 1 zzj-2019211379-0004

ping -w 1 zzj-2019211379-0001

ping -w 1 zzj-2019211379-0002

From now on, as far as name resolution is concerned, adding a node only requires a new entry in the Master's /etc/hosts.
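The same cross-check can be done without sending ICMP by asking the local resolver directly. The sketch below assumes the node names follow the prefix-plus-four-digit pattern used in this cluster; node_names and check_resolution are hypothetical helper names, and getent is the standard glibc lookup tool.

```shell
#!/bin/sh
# Hypothetical helper: print node names PREFIX0001 .. PREFIX000N.
node_names() {
    prefix=$1
    count=$2
    i=1
    while [ "$i" -le "$count" ]; do
        printf '%s%04d\n' "$prefix" "$i"
        i=$((i + 1))
    done
}

# Report which of those names the local resolver can and cannot resolve.
check_resolution() {
    for host in $(node_names "$1" "$2"); do
        if getent hosts "$host" > /dev/null; then
            echo "ok      $host"
        else
            echo "FAILED  $host"
        fi
    done
}

# Example: check_resolution zzj-2019211379- 4
```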

[Nodes] known hosts

Run on all nodes:

ssh zzj-2019211379-0001 \

&& ssh zzj-2019211379-0002 \

&& ssh zzj-2019211379-0003 \

&& ssh zzj-2019211379-0004

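Each connection asks you to confirm the host fingerprint. Type yes, then exit to move on to the next one.

To check that the nodes now trust each other, run: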

pdsh -l root -w ssh:zzj-2019211379-000[1-4] "date"

zzj-2019211379-0002: Sun 20 Mar 2022 09:21:01 PM CST

zzj-2019211379-0001: Sun 20 Mar 2022 09:21:01 PM CST

zzj-2019211379-0003: Sun 20 Mar 2022 09:21:01 PM CST

zzj-2019211379-0004: Sun 20 Mar 2022 09:21:01 PM CST

[Nodes] OpenJDK

On Debian you first need to add the buster/updates source:

deb http://mirrors.aliyun.com/debian-security buster/updates main

Ubuntu already ships the package, so you can run directly:

pdsh -l root -w ssh:zzj-2019211379-000[1-4] "apt install openjdk-8-jdk -y"

Check:

pdsh -l root -w ssh:zzj-2019211379-000[1-4] "java -version"

zzj-2019211379-0003: openjdk version "1.8.0_312"

zzj-2019211379-0003: OpenJDK Runtime Environment (build 1.8.0_312-8u312-b07-0ubuntu1~20.04-b07)

zzj-2019211379-0003: OpenJDK 64-Bit Server VM (build 25.312-b07, mixed mode)

zzj-2019211379-0001: openjdk version "1.8.0_312"

zzj-2019211379-0001: OpenJDK Runtime Environment (build 1.8.0_312-8u312-b07-0ubuntu1~20.04-b07)

zzj-2019211379-0001: OpenJDK 64-Bit Server VM (build 25.312-b07, mixed mode)

zzj-2019211379-0002: openjdk version "1.8.0_312"

zzj-2019211379-0002: OpenJDK Runtime Environment (build 1.8.0_312-8u312-b07-0ubuntu1~20.04-b07)

zzj-2019211379-0002: OpenJDK 64-Bit Server VM (build 25.312-b07, mixed mode)

zzj-2019211379-0004: openjdk version "1.8.0_312"

zzj-2019211379-0004: OpenJDK Runtime Environment (build 1.8.0_312-8u312-b07-0ubuntu1~20.04-b07)

zzj-2019211379-0004: OpenJDK 64-Bit Server VM (build 25.312-b07, mixed mode)

Installing the DFS component

[Nodes] Downloading and extracting Hadoop

pdsh -l root -w ssh:zzj-2019211379-000[1-4] "wget -P ~ https://mirrors.huaweicloud.com/apache/hadoop/common/hadoop-3.3.2/hadoop-3.3.2.tar.gz"

pdsh -l root -w ssh:zzj-2019211379-000[1-4] "mkdir ~/modules && tar -xf hadoop-3.3.2.tar.gz -C ~/modules/ && rm hadoop-3.3.2.tar.gz"

[Nodes] hadoop-env.sh

# Make sure the directory exists

dir /usr/lib/jvm/java-8-openjdk-amd64

Set JAVA_HOME in hadoop-env.sh:

pdsh -l root -w ssh:zzj-2019211379-000[1-4] "sed -i 's|# export JAVA_HOME=|export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64/|g' ~/modules/hadoop-3.3.2/etc/hadoop/hadoop-env.sh"
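Before running the substitution on every node, it can help to try it on a scratch file. The sketch below is a slightly stricter variant of the expression above (anchored to the start of the line, and replacing the rest of it); set_java_home is a hypothetical wrapper, and GNU sed is assumed for the -i flag.

```shell
#!/bin/sh
# Hypothetical wrapper: uncomment and set JAVA_HOME in a hadoop-env.sh style file.
set_java_home() {
    env_file=$1
    java_home=$2
    sed -i "s|^# export JAVA_HOME=.*|export JAVA_HOME=$java_home|" "$env_file"
}

# Dry run against a scratch file that mimics the commented-out default.
scratch=$(mktemp)
printf '# some other comment\n# export JAVA_HOME=\n' > "$scratch"
set_java_home "$scratch" /usr/lib/jvm/java-8-openjdk-amd64
grep '^export JAVA_HOME=' "$scratch"   # show the rewritten line
rm -f "$scratch"
```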

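Then, on the master node, append the following. It points HADOOP_HOME at /var/local/hadoop/hadoop-3.3.2, whereas the download step extracted to ~/modules, so make the two paths agree before continuing: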

vi /etc/profile.d/hadoop.sh

export HADOOP_HOME="/var/local/hadoop/hadoop-3.3.2"

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export HADOOP_HDFS_HOME=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_YARN_HOME=$HADOOP_HOME

export HDFS_NAMENODE_USER="root"

export HDFS_DATANODE_USER="root"

export HDFS_SECONDARYNAMENODE_USER="root"

export YARN_RESOURCEMANAGER_USER="root"

export YARN_NODEMANAGER_USER="root"

[Nodes] Environment variables

cat << EOT > /etc/environment

PATH="/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/snap/bin:/var/local/hadoop/hadoop-3.3.2/bin:/var/local/hadoop/hadoop-3.3.2/sbin"

JAVA_HOME="/usr/lib/jvm/java-8-openjdk-amd64/jre"

EOT

[Master] core-site.xml

code /var/local/hadoop/hadoop-3.3.2/etc/hadoop/core-site.xml

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>fs.obs.readahead.inputstream.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>fs.obs.buffer.max.range</name>
    <value>6291456</value>
  </property>
  <property>
    <name>fs.obs.buffer.part.size</name>
    <value>2097152</value>
  </property>
  <property>
    <name>fs.obs.threads.read.core</name>
    <value>500</value>
  </property>
  <property>
    <name>fs.obs.threads.read.max</name>
    <value>1000</value>
  </property>
  <property>
    <name>fs.obs.write.buffer.size</name>
    <value>8192</value>
  </property>
  <property>
    <name>fs.obs.read.buffer.size</name>
    <value>8192</value>
  </property>
  <property>
    <name>fs.obs.connection.maximum</name>
    <value>1000</value>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://zzj-2019211379-0001:9820</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/var/local/hadoop/hadoop-3.3.2/tmp</value>
  </property>
  <property>
    <name>fs.obs.access.key</name>
    <value>U6YSHXXWCOHJGCBCMOQG</value>
  </property>
  <property>
    <name>fs.obs.secret.key</name>
    <value>kjHdB4s1ldylm1sv0CwAC73UK075LCJ7E7qaBuAF</value>
  </property>
  <property>
    <name>fs.obs.endpoint</name>
    <value>obs.cn-north-4.myhuaweicloud.com:5080</value>
  </property>
  <property>
    <name>fs.obs.buffer.dir</name>
    <value>/var/local/hadoop/data/buf</value>
  </property>
  <property>
    <name>fs.obs.impl</name>
    <value>org.apache.hadoop.fs.obs.OBSFileSystem</value>
  </property>
  <property>
    <name>fs.obs.connection.ssl.enabled</name>
    <value>false</value>
  </property>
  <property>
    <name>fs.obs.fast.upload</name>
    <value>true</value>
  </property>
  <property>
    <name>fs.obs.socket.send.buffer</name>
    <value>65536</value>
  </property>
  <property>
    <name>fs.obs.socket.recv.buffer</name>
    <value>65536</value>
  </property>
  <property>
    <name>fs.obs.max.total.tasks</name>
    <value>20</value>
  </property>
  <property>
    <name>fs.obs.threads.max</name>
    <value>20</value>
  </property>
</configuration>
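To confirm that a property such as fs.defaultFS holds the intended value after editing, it can be read back from the file. The sketch below is a rough illustration rather than a real XML parser: site_value is a hypothetical helper, and it assumes each name and value tag sits on its own line, as in the file above.

```shell
#!/bin/sh
# Hypothetical helper: print the <value> that follows <name>KEY</name>.
# Assumes one tag per line; this is not a general XML parser.
site_value() {
    key=$1
    file=$2
    awk -v key="$key" '
        index($0, "<name>" key "</name>") { found = 1; next }
        found && /<value>/ {
            sub(/.*<value>/, "")      # drop everything up to the opening tag
            sub(/<\/value>.*/, "")    # drop the closing tag and what follows
            print
            exit
        }
    ' "$file"
}

# Example: site_value fs.defaultFS /var/local/hadoop/hadoop-3.3.2/etc/hadoop/core-site.xml
```

On a node where Hadoop is already on the PATH, hdfs getconf -confKey fs.defaultFS reports the value Hadoop itself resolves.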

[Master] hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>zzj-2019211379-0001:50090</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.https-address</name>
    <value>zzj-2019211379-0001:50091</value>
  </property>
</configuration>

[Master] workers

In Hadoop 2.x this file is called slaves (a shift from slavery to capitalism?).

zzj-2019211379-0001

zzj-2019211379-0002

zzj-2019211379-0003

zzj-2019211379-0004

[Master] Syncing the configuration above to the worker nodes

scp /var/local/hadoop/hadoop-3.3.2/etc/hadoop/* zzj-2019211379-0001:/var/local/hadoop/hadoop-3.3.2/etc/hadoop/

scp /var/local/hadoop/hadoop-3.3.2/etc/hadoop/* zzj-2019211379-0002:/var/local/hadoop/hadoop-3.3.2/etc/hadoop/

scp /var/local/hadoop/hadoop-3.3.2/etc/hadoop/* zzj-2019211379-0003:/var/local/hadoop/hadoop-3.3.2/etc/hadoop/

scp /var/local/hadoop/hadoop-3.3.2/etc/hadoop/* zzj-2019211379-0004:/var/local/hadoop/hadoop-3.3.2/etc/hadoop/
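Since the workers file already lists every host, the four scp lines can be derived from it instead of being written out by hand. The sketch below only prints the commands so they can be reviewed first; sync_commands is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print one scp command per host listed in a workers file.
# Review the output, then pipe it to sh to actually copy.
sync_commands() {
    workers_file=$1
    conf_dir=$2
    # Skip blank lines and comment lines in the workers file.
    grep -v -e '^[[:space:]]*$' -e '^[[:space:]]*#' "$workers_file" |
    while read -r host; do
        echo "scp $conf_dir/* $host:$conf_dir/"
    done
}

# Example:
# sync_commands /var/local/hadoop/hadoop-3.3.2/etc/hadoop/workers \
#     /var/local/hadoop/hadoop-3.3.2/etc/hadoop | sh
```

Printing instead of executing keeps the helper safe to run while the host list is still being edited.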

[Master] format namenode

source /etc/environment

hadoop namenode -format

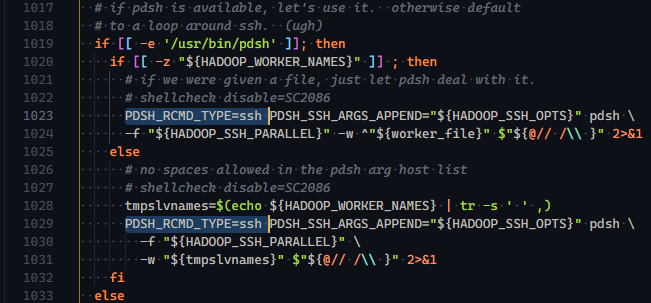
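One caveat with source /etc/environment: the file holds plain KEY="value" lines, so sourcing it sets the variables in the current shell but does not export new ones such as JAVA_HOME to child processes. A minimal sketch of a workaround, assuming a POSIX shell (load_env is a hypothetical helper name):

```shell
#!/bin/sh
# Hypothetical helper: load KEY="value" lines from a file and export them.
load_env() {
    set -a          # auto-export every variable assigned from here on
    . "$1"
    set +a          # back to normal assignment behaviour
}

# Example: load_env /etc/environment
```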

[Master] Starting DFS

Edit /var/local/hadoop/hadoop-3.3.2/libexec/hadoop-functions.sh and add PDSH_RCMD_TYPE=ssh to the following two lines, so that the commands below do not fail to connect.

Then run:

start-dfs.sh

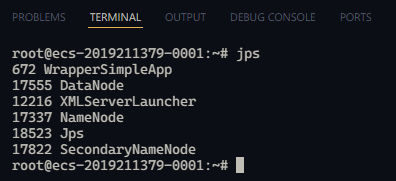

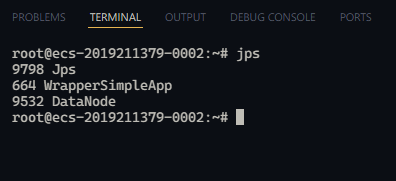

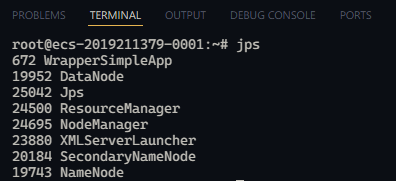

[Nodes] Checking status with jps

pdsh -l root -w ssh:zzj-2019211379-000[1-4] "jps"

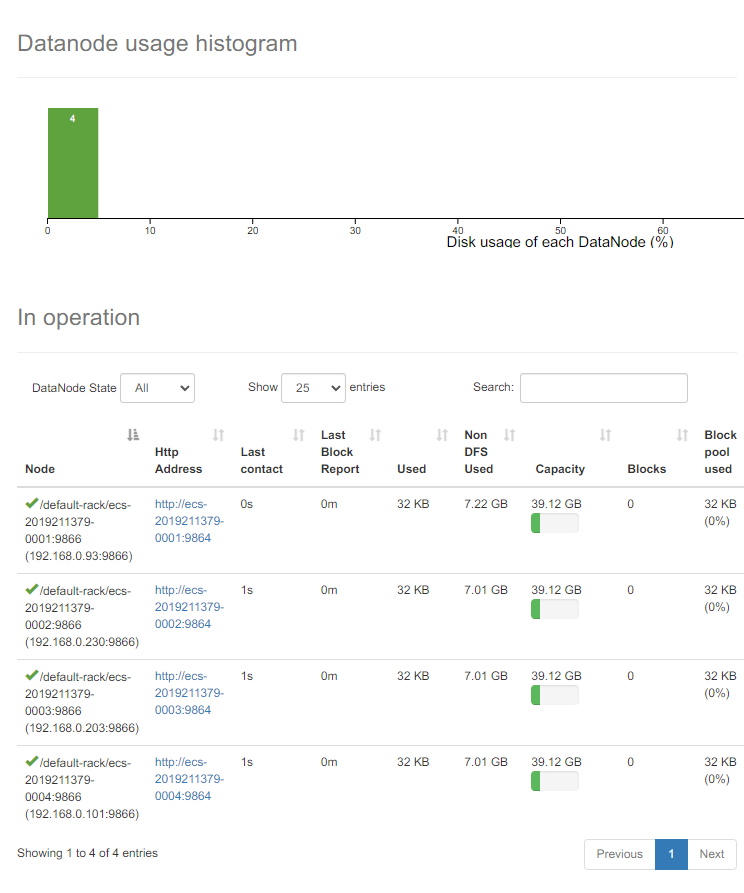
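Reading jps output by eye gets tedious across several nodes. The sketch below lists which expected daemons are absent from a node's jps output; missing_daemons is a hypothetical helper, and which daemons to expect on which node is an assumption you supply as arguments.

```shell
#!/bin/sh
# Hypothetical helper: read jps output ("PID Name" per line) on stdin and
# print each expected daemon name (given as arguments) that is missing.
missing_daemons() {
    seen=$(awk '{ print $2 }')        # keep only the process names
    for daemon in "$@"; do
        echo "$seen" | grep -qx -- "$daemon" || echo "$daemon"
    done
}

# Example: jps | missing_daemons NameNode DataNode SecondaryNameNode
```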

[Master] Checking status on the web

Open http://zzj-2019211379-0001:9870

Installing the YARN component

[Nodes] yarn-site.xml

Run on all nodes:

cat << EOT > /var/local/hadoop/hadoop-3.3.2/etc/hadoop/yarn-site.xml
<?xml version="1.0"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<configuration>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>zzj-2019211379-0001</value>
  </property>
</configuration>
EOT

The value must name the same node on every machine.

[Master] start-yarn

start-yarn.sh

[Master] Checking status with jps

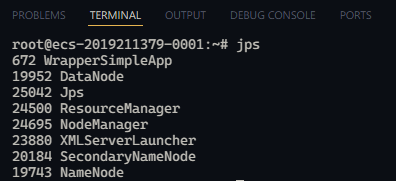

[Master] Checking status on the web

Open http://zzj-2019211379-0001:8088/cluster

Installing the MapReduce component

Writing an HDFS client in Java

[PC] Writing the code

Key code:

package com.less_bug.demo;

import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;
import java.util.logging.Logger;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class HdfsClient {

    private static final Logger LOGGER = Logger.getGlobal();

    // NameNode URI, for example "hdfs://localhost:9000"
    private String host;

    public HdfsClient(String host) {
        this.host = host;
    }

    // Opens a FileSystem handle as user "root"; callers close it via try-with-resources.
    public FileSystem getFileSystem() throws IOException, InterruptedException, URISyntaxException {
        var configuration = new Configuration();
        configuration.set("fs.defaultFS", host); // "hdfs://localhost:9000"
        configuration.set("fs.hdfs.impl", org.apache.hadoop.hdfs.DistributedFileSystem.class.getName());
        // Connect to DataNodes by hostname rather than by their private IPs.
        configuration.set("dfs.client.use.datanode.hostname", "true");
        configuration.set("fs.file.impl",
                org.apache.hadoop.fs.LocalFileSystem.class.getName());
        // return FileSystem.get(configuration, "root");
        var username = "root";
        return FileSystem.get(new URI(host), configuration, username);
    }

    public void upload(String localPath, String remotePath) {
        LOGGER.fine("Uploading file: " + localPath + " to " + remotePath);
        Path localPathObj = new Path(localPath);
        Path remotePathObj = new Path(remotePath);
        try (var fs = getFileSystem()) {
            fs.copyFromLocalFile(localPathObj, remotePathObj);
        } catch (Exception e) {
            LOGGER.severe("Error while uploading file: " + localPath + " to " + remotePath);
            e.printStackTrace();
        }
    }

    public void download(String localPath, String remotePath) {
        LOGGER.fine("Downloading file: " + localPath);
        Path localPathObj = new Path(localPath);
        Path remotePathObj = new Path(remotePath);
        try (var fs = getFileSystem()) {
            fs.copyToLocalFile(remotePathObj, localPathObj);
        } catch (Exception e) {
            LOGGER.severe("Error while downloading file: " + localPath);
            e.printStackTrace();
        }
    }

    public void list(String remoteDir) {
        LOGGER.fine("Listing files in " + remoteDir);
        Path remotePathObj = new Path(remoteDir);
        try (var fs = getFileSystem()) {
            var status = fs.listStatus(remotePathObj);
            for (var fileStatus : status) {
                LOGGER.info(fileStatus.getPath().toString());
            }
        } catch (Exception e) {
            LOGGER.severe("Error while listing files in " + remoteDir);
            e.printStackTrace();
        }
    }

}

The full code is at pluveto/hdfs-demo (github.com)

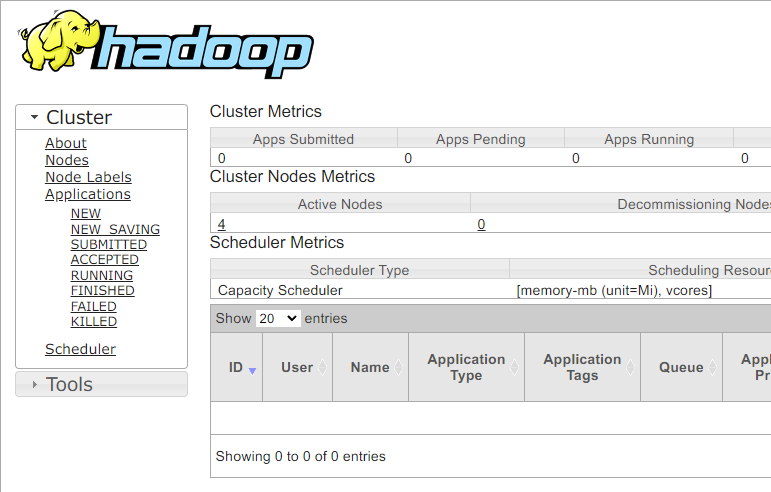

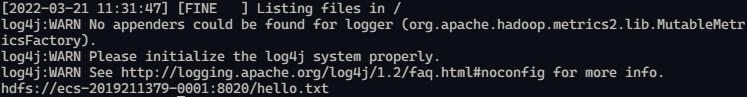

[PC] Uploading and viewing files

Run with the arguments --action upload --target /hello.txt --file hello.txt to upload a file.

View the file on the web at http://zzj-2019211379-0001:9870/explorer.html#/

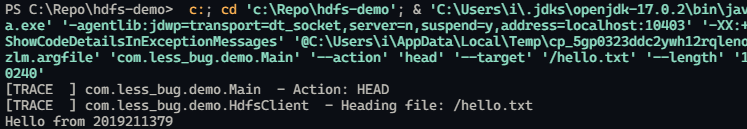

Read the head of the file locally (--action head --target /hello.txt --length 10240):

Read the tail of the file locally (--action tail --target /hello.txt --length 10240):

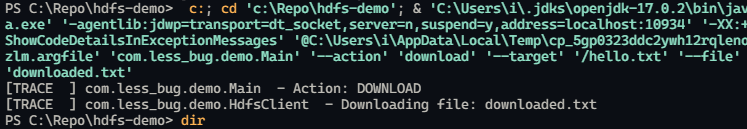

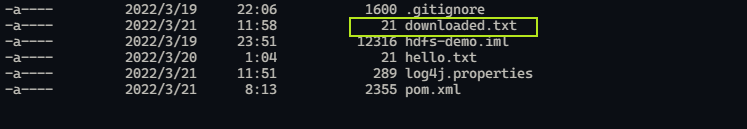

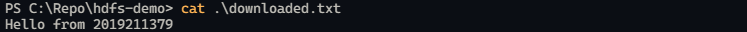

Download the file (--action download --target /hello.txt --file downloaded.txt)

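Appendix: Troubleshooting

Datanode Information shows only two nodes:

- /default-rack/zzj-2019211379-0001:9866 (192.168.0.93:9866)
- /default-rack/localhost.vm:9866 (192.168.0.230:9866)

The cause is a superfluous line in /etc/hosts. Delete lines like this one:

127.0.1.1 localhost.vm localhost

Then run:

stop-dfs.sh && start-dfs.sh

All four nodes should now be listed.

Nothing is listening on port 8020: the NameNode has not started.

hadoop namenode -format

No DataNodes, or the wrong number of them:

- First clean the tmp directory (the one you configured).
- Then format the NameNode:
- hadoop namenode -format

None of the worker DataNodes are present:

- Check that $HADOOP_HOME/etc/hadoop/workers is correct.
- Check that the fs.defaultFS port is reachable.
- Datanode denied communication with namenode because hostname cannot be resolved
  - Change only the master: in its hosts file, set the IP for its own hostname to the private IP.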
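When checking whether the number of DataNodes is right, the count can also be read from the command line. The sketch below parses the "Live datanodes (N):" line printed by hdfs dfsadmin -report; live_datanodes is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read `hdfs dfsadmin -report` output on stdin and
# print the number from its "Live datanodes (N):" line.
live_datanodes() {
    sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*/\1/p'
}

# Example: hdfs dfsadmin -report | live_datanodes
```

For the four-node cluster in this walkthrough the expected result is 4; a smaller number points back at the hosts and workers checks listed above.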

Appendix: Hadoop ports

| Category | Application | Hadoop 2.x | Hadoop 3 |
|---|---|---|---|
| NameNode | NameNode | 8020 | 8020/9820 |
| NameNode | NN HTTP UI | 50070 | 9870 |
| NameNode | NN HTTPS UI | 50470 | 9871 |
| SecondaryNameNode | SNN HTTPS | 50091 | 9869 |
| SecondaryNameNode | SNN HTTP UI | 50090 | 9868 |
| DataNode | DN IPC | 50020 | 9867 |
| DataNode | DN | 50010 | 9866 |
| DataNode | DN HTTP UI | 50075 | 9864 |
| DataNode | DN HTTPS UI | 50475 | 9865 |
| YARN | YARN UI | 8088 | 8088 |
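When porting firewall rules or old configuration from Hadoop 2.x, the mapping in this table can be expressed as a small lookup. This is only a sketch of the defaults listed here; hadoop3_port is a hypothetical helper, and a cluster that overrides ports in its site files will differ.

```shell
#!/bin/sh
# Hypothetical helper: map a Hadoop 2.x default port to its Hadoop 3 default.
hadoop3_port() {
    case $1 in
        50070) echo 9870 ;;   # NameNode HTTP UI
        50470) echo 9871 ;;   # NameNode HTTPS UI
        50090) echo 9868 ;;   # SecondaryNameNode HTTP UI
        50091) echo 9869 ;;   # SecondaryNameNode HTTPS
        50010) echo 9866 ;;   # DataNode data transfer
        50020) echo 9867 ;;   # DataNode IPC
        50075) echo 9864 ;;   # DataNode HTTP UI
        50475) echo 9865 ;;   # DataNode HTTPS UI
        *)     echo "$1" ;;   # unchanged, or not covered by the table
    esac
}

# Example: hadoop3_port 50070
```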

Appendix: Hadoop 2 ports

| Component | Node | Default port | Configuration | Purpose |
|---|---|---|---|---|
| HDFS | DataNode | 50010 | dfs.datanode.address | DataNode service port, used for data transfer |
| HDFS | DataNode | 50075 | dfs.datanode.http.address | HTTP service port |
| HDFS | DataNode | 50475 | dfs.datanode.https.address | HTTPS service port |
| HDFS | DataNode | 50020 | dfs.datanode.ipc.address | IPC service port |
| HDFS | NameNode | 50070 | dfs.namenode.http-address | HTTP service port |
| HDFS | NameNode | 50470 | dfs.namenode.https-address | HTTPS service port |
| HDFS | NameNode | 8020 | fs.defaultFS | RPC port that accepts client connections, used to fetch file system metadata |
| HDFS | JournalNode | 8485 | dfs.journalnode.rpc-address | RPC service |
| HDFS | JournalNode | 8480 | dfs.journalnode.http-address | HTTP service |
| HDFS | ZKFC | 8019 | dfs.ha.zkfc.port | ZooKeeper FailoverController, used for NameNode HA |
| YARN | ResourceManager | 8032 | yarn.resourcemanager.address | RM applications manager (ASM) port |
| YARN | ResourceManager | 8030 | yarn.resourcemanager.scheduler.address | IPC port of the scheduler component |
| YARN | ResourceManager | 8031 | yarn.resourcemanager.resource-tracker.address | IPC |
| YARN | ResourceManager | 8033 | yarn.resourcemanager.admin.address | IPC |
| YARN | ResourceManager | 8088 | yarn.resourcemanager.webapp.address | HTTP service port |
| YARN | NodeManager | 8040 | yarn.nodemanager.localizer.address | Localizer IPC |
| YARN | NodeManager | 8042 | yarn.nodemanager.webapp.address | HTTP service port |
| YARN | NodeManager | 8041 | yarn.nodemanager.address | Port of the container manager in the NM |
| YARN | JobHistory Server | 10020 | mapreduce.jobhistory.address | IPC |
| YARN | JobHistory Server | 19888 | mapreduce.jobhistory.webapp.address | HTTP service port |
| HBase | Master | 60000 | hbase.master.port | IPC |
| HBase | Master | 60010 | hbase.master.info.port | HTTP service port |
| HBase | RegionServer | 60020 | hbase.regionserver.port | IPC |
| HBase | RegionServer | 60030 | hbase.regionserver.info.port | HTTP service port |
| HBase | HQuorumPeer | 2181 | hbase.zookeeper.property.clientPort | HBase-managed ZK mode; not opened when a standalone ZooKeeper cluster is used |
| HBase | HQuorumPeer | 2888 | hbase.zookeeper.peerport | HBase-managed ZK mode; not opened when a standalone ZooKeeper cluster is used |
| HBase | HQuorumPeer | 3888 | hbase.zookeeper.leaderport | HBase-managed ZK mode; not opened when a standalone ZooKeeper cluster is used |
| Hive | Metastore | 9083 | export PORT= in /etc/default/hive-metastore | |
| Hive | HiveServer | 10000 | export HIVE_SERVER2_THRIFT_PORT= in /etc/hive/conf/hive-env.sh | |
| ZooKeeper | Server | 2181 | clientPort= in /etc/zookeeper/conf/zoo.cfg | Port that serves clients |
| ZooKeeper | Server | 2888 | server.x=[hostname]:nnnnn[:nnnnn] in /etc/zookeeper/conf/zoo.cfg (the first nnnnn) | Used by followers to connect to the leader; only the leader listens on it |
| ZooKeeper | Server | 3888 | server.x=[hostname]:nnnnn[:nnnnn] in /etc/zookeeper/conf/zoo.cfg (the second nnnnn) | Used for leader election; only needed when electionAlg is 1, 2, or 3 (the default) |